Serverless and data stacks

What we can learn by reading an academic paper from Snowflake

Building a data warehouse solution can be expensive, because it almost always entails a “big data” scenario. Imagine investing in tens of servers to build a data-crunching cluster not too long ago (about 10 years ago): that would have been a huge budget planning exercise for the decision makers.

However, building data warehouse infrastructure nowadays costs you almost nothing at the very beginning other than the coding effort for your business logic (and that code is a proprietary business asset you want to build anyway).

Why you may want to read an academic paper from Snowflake

Snowflake has become a new “platform” for data engineering teams (think of the “platform” like Windows) and a lot of data applications have been built on top of it.

I have always felt that an academic paper (rather than a marketing white paper) written by industry leaders can shed light on the first principles behind trendy topics. Reading the academic paper (SIGMOD 2016) written by the Snowflake founders and core team members gives us the chance to revisit the pioneers’ original vision and the use cases they wanted to facilitate.

Let’s discuss a few things the paper talks about.

Data warehouse compute engine goes serverless

Just as Lambda functions in AWS come and go to serve event triggers, the data processing units in Snowflake come and go in a similar manner.

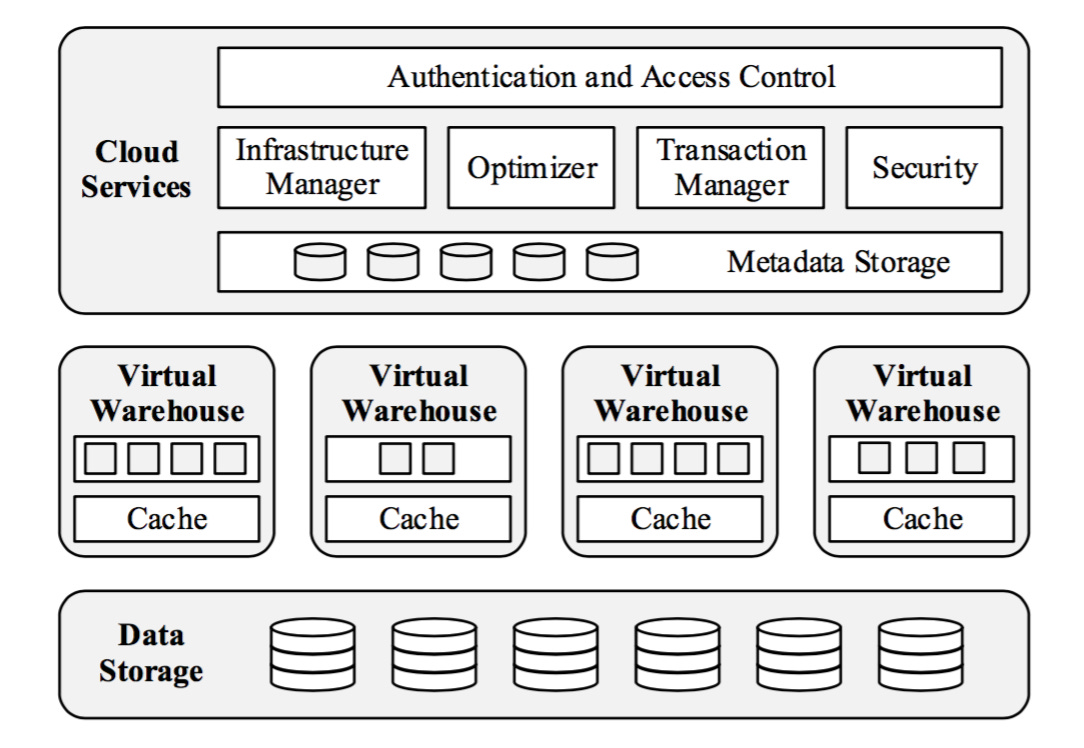

(read Section 3.2 in the paper) Virtual Warehouses

Think of this layer as their “Lambda”. Here is the analogy:

Instead of planning an N-node cluster as your app tier service (and paying hundreds of dollars per month), your app tier Lambda functions come and go; similarly,

Instead of planning an N-node cluster as your data warehouse (and paying thousands of dollars per month upfront), your data-crunching nodes come and go.

To users, it feels like “serverless”.

Cloud object storage provides infinite storage

Object storage is the new storage “medium” provided by cloud vendors, be it AWS S3, Azure Blob Storage, or Google Cloud Storage. This type of storage shares two properties: 1) low cost and 2) effectively infinite scalability, compared with traditional local storage or local storage clusters.

NOTE: at the time the paper was published, Snowflake ran only on AWS (but today, in 2021, it works across the three major clouds).

Both properties enable us to build a data warehouse whose storage capacity scales effectively without limit, without worrying about blowing up our budget too early.

The cost model of “serverless” cloud data warehouse

(read Section 2 in the paper) STORAGE VERSUS COMPUTE

Because the storage layer (low cost and infinitely scalable) is separated from the compute layer (pay per usage, a come-and-go model like AWS Lambda or Google Cloud Functions), “serverless” cloud data warehouse vendors can offer a really attractive pricing model:

you always pay for your storage (but it’s the low-cost part)

you pay only for your true usage of compute (you don’t pay for idle cycles)

As a result, your organization’s decision makers no longer face a painful choice when deciding whether to invest in a data warehousing solution, because:

Zero upfront commitment

Still low cost even if your data warehouse contains a huge volume of data

True pay-per-use model

In fact, once this attractive pricing model is available, decision making can become a lot easier and more agile: a data engineer or a developer could even start influencing the organization by showing real PoC results, and become a 10x engineer.
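To make the pricing model above concrete, here is a minimal sketch in Python that compares an always-on cluster with a "storage plus pay-per-use compute" bill. Every number in it is a made-up placeholder, not a real vendor price, and the names (`PayPerUsePricing`, `monthly_cost_pay_per_use`, `monthly_cost_fixed_cluster`) are hypothetical helpers introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass
class PayPerUsePricing:
    """Hypothetical price list; the values are placeholders, not vendor quotes."""
    storage_per_tb_month: float  # charged every month for data at rest
    compute_per_hour: float      # charged only while compute is running


def monthly_cost_pay_per_use(pricing: PayPerUsePricing,
                             stored_tb: float,
                             compute_hours: float) -> float:
    """Storage is always billed; compute is billed only for hours actually used."""
    storage_cost = pricing.storage_per_tb_month * stored_tb
    compute_cost = pricing.compute_per_hour * compute_hours
    return storage_cost + compute_cost


def monthly_cost_fixed_cluster(nodes: int, node_per_month: float) -> float:
    """An always-on cluster costs the same whether it is busy or idle."""
    return nodes * node_per_month


# Assumed placeholder prices for illustration only.
pricing = PayPerUsePricing(storage_per_tb_month=25.0, compute_per_hour=2.0)

# A small PoC: 1 TB stored, 10 hours of queries in the month.
poc_cost = monthly_cost_pay_per_use(pricing, stored_tb=1.0, compute_hours=10.0)

# A month with no queries at all: only storage is billed.
idle_cost = monthly_cost_pay_per_use(pricing, stored_tb=1.0, compute_hours=0.0)

# A hypothetical 4-node always-on cluster at a placeholder price per node.
cluster_cost = monthly_cost_fixed_cluster(nodes=4, node_per_month=500.0)

print(f"PoC month (pay per use): {poc_cost}")
print(f"Idle month (pay per use): {idle_cost}")
print(f"Always-on cluster:        {cluster_cost}")
```

The point of the sketch is the shape of the bill rather than the numbers: the pay-per-use cost drops to the storage charge when nobody runs queries, while the fixed cluster costs the same in a busy month and an idle one.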

Other “serverless” data warehouses

What are other data warehouse platforms that can be categorized into this “serverless” cost model? (Note: we categorize them from the cost model perspective)

Other than Snowflake, the following options fall into this category:

Google BigQuery

AWS Athena

Though their compute pricing model differs from Snowflake’s in that each query is charged according to the size of the dataset it touches or scans, we consider them part of the same “serverless” model because this pricing is also based on true usage. If you are an engineer who wants to build something to show initial results, you don’t need to pay for a beefy cluster or worry about blowing up your corporate budget.
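The scan-based pricing described above can be sketched as a simple calculation: each query is billed in proportion to the bytes it scans. The price per terabyte below is an assumed placeholder (check the vendor's current price list), the terabyte is taken as 10^12 bytes for simplicity, and `scan_query_cost` is a hypothetical helper, not part of any vendor SDK.

```python
TB = 10**12  # assumption: decimal terabyte; vendors may define the unit differently


def scan_query_cost(bytes_scanned: int, price_per_tb: float) -> float:
    """Cost of one query under a pay-per-bytes-scanned model."""
    return bytes_scanned / TB * price_per_tb


# Assumed placeholder price, for illustration only.
PRICE_PER_TB = 5.0

# Three exploratory queries scanning 50 GB, 200 GB, and 750 GB.
bytes_per_query = [50 * 10**9, 200 * 10**9, 750 * 10**9]

total_cost = sum(scan_query_cost(b, PRICE_PER_TB) for b in bytes_per_query)
print(f"Total cost for {len(bytes_per_query)} queries: {total_cost:.2f}")
```

Under this model a month with no queries costs nothing for compute, and scanning less data (for example, by selecting fewer columns or filtering on partitions) directly lowers the bill.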

Everyone and every org can build their data stack

Data is the new oil, and there will be a lot of data-related needs inside modern organizations. If engineers really want to embark on a data engineering journey, infrastructure is no longer the barrier. And if you’d like to acquire a new skill, there is nothing sitting between your technical curiosity and working code with a successful demo to your team.

Summary

“Serverless” data warehouse platforms have already dramatically changed the infrastructure cost model of data stacks, in a way that allows people to start without any upfront commitment.

Engineers already say the serverless paradigm saves infrastructure cost at the service tier; the cost reduction at the data stack tier can be even bigger. If your organization has a need, definitely give it a try.

Please let us know about your first-hand experience.